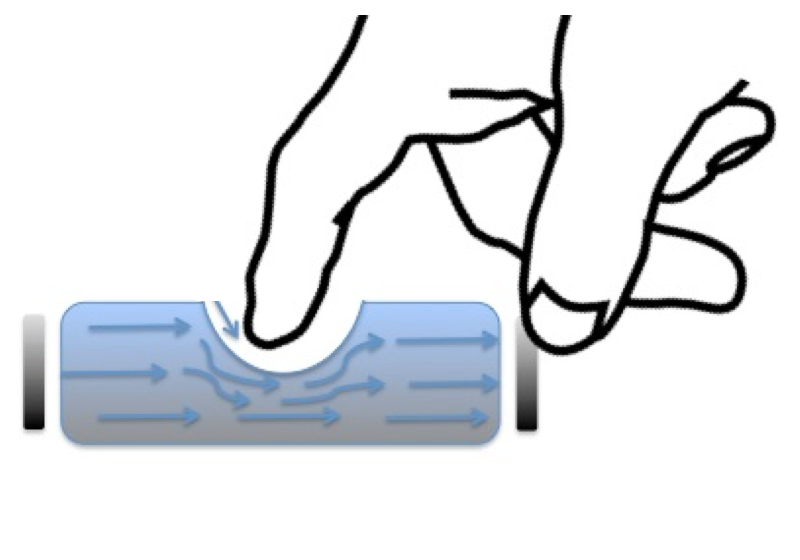

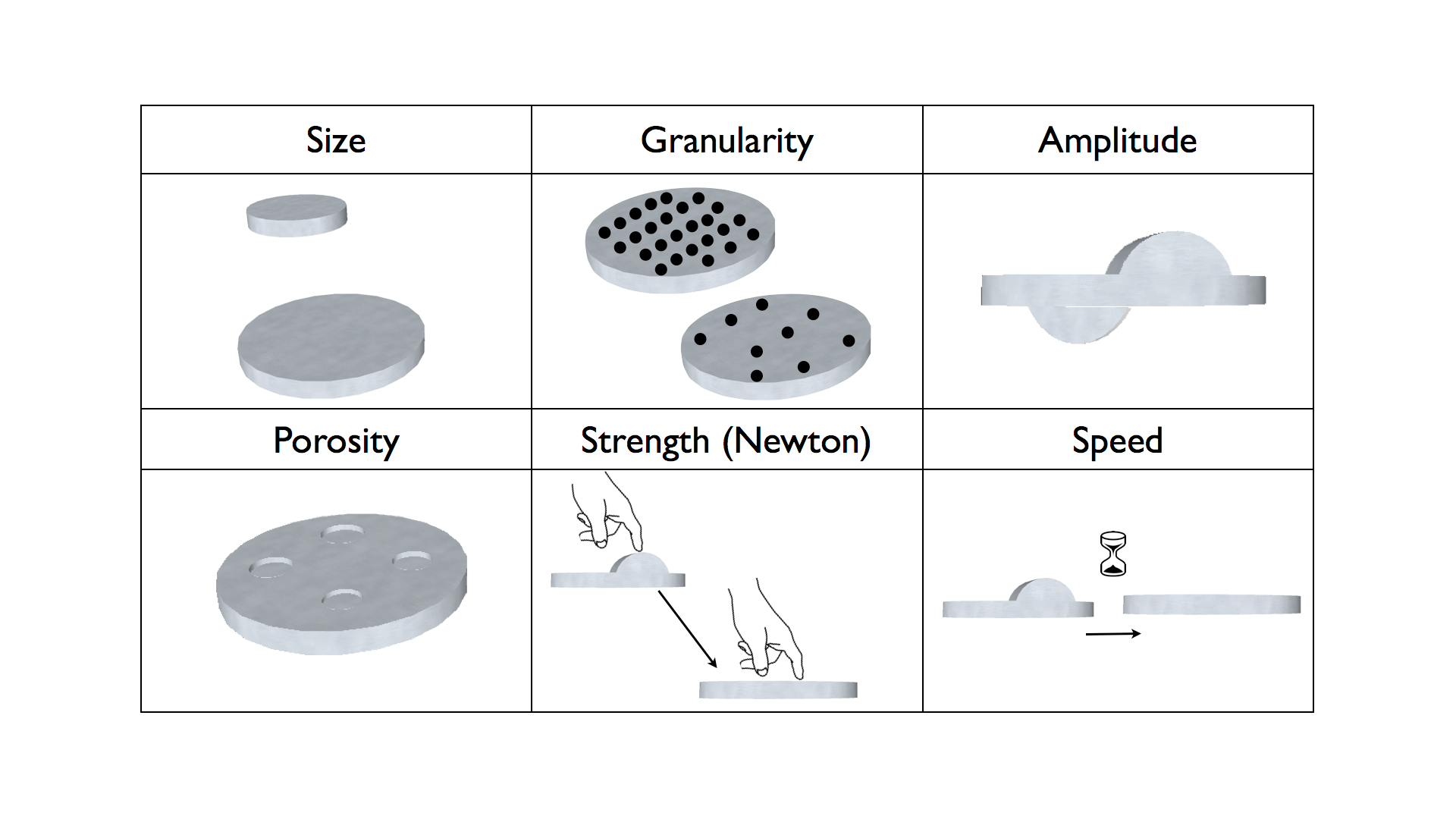

Smart materials are crucial for developing more expressive, flexible, and robust devices, but we do not yet know which types are most suitable or how to construct effective interfaces with them.

Most current HCI research tries to mimic the behavior of smart materials through mechanical implementations built from conventional, non-smart materials. These implementations tend to be difficult to construct, power-hungry, and unreliable. Meanwhile, most smart material research takes place in Physics, Chemistry, and Engineering labs, and the materials are rarely applied to interactive devices, despite their potential to radically change the nature of human-computer interaction. I’m working to bridge this gap by making smart materials readily available to researchers outside Physics, Chemistry, and Engineering labs. This will allow those researchers to concentrate on building more sophisticated and robust shape-changing interfaces with these materials.